Implementing a Distributed Program in MPI

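    #include "mpi.h"
    #include <stdio.h>
    #include <stdlib.h>

    #define MASTER 0

    int main(int argc, char *argv[]) {
        int numtasks, rank, len;
        char hostname[MPI_MAX_PROCESSOR_NAME];

        /* Start MPI, then query the process count, this process's rank, and the host name. */
        MPI_Init(&argc, &argv);
        MPI_Comm_size(MPI_COMM_WORLD, &numtasks);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Get_processor_name(hostname, &len);

        /* Only the process with rank 0 reports the total number of tasks. */
        if (rank == MASTER)
            printf("MASTER: Number of MPI tasks is: %d\n", numtasks);
        else
            printf("WORKER: Rank: %d\n", rank);

        /* Shut MPI down; no MPI communication may follow this call. */
        MPI_Finalize();
        return 0;
    }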

A communicator (MPI_Comm) represents a group of processes that communicate with each other. MPI_COMM_WORLD represents the default communicator, to which all processes belong.

Functions:

- MPI_Init - initializes the MPI execution environment in which the processes run. It must be called before the other MPI functions used here. The command-line arguments are passed by address so that the MPI implementation can read them.

- MPI_Comm_size - a function that determines the number of processes (numtasks) running within the communicator (usually MPI_COMM_WORLD).

- MPI_Comm_rank - a function that determines the identifier (rank) of the current process within the communicator.

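- MPI_Get_processor_name - returns the name of the processor (typically the host name of the node) on which the calling process runs, together with the length of that name.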

- MPI_Finalize - shuts down the MPI execution environment. Every process must call it before exiting, and no MPI communication is allowed after it.

When processes exchange data, the type of the data being sent or received must always be specified. MPI uses the MPI_Datatype type for this purpose; its predefined values correspond to data types in C/C++, as shown in the table below:

| MPI_Datatype | Equivalent in C/C++ |
|---|---|
| MPI_INT | int |
| MPI_LONG | long |
| MPI_CHAR | char |
| MPI_FLOAT | float |
| MPI_DOUBLE | double |

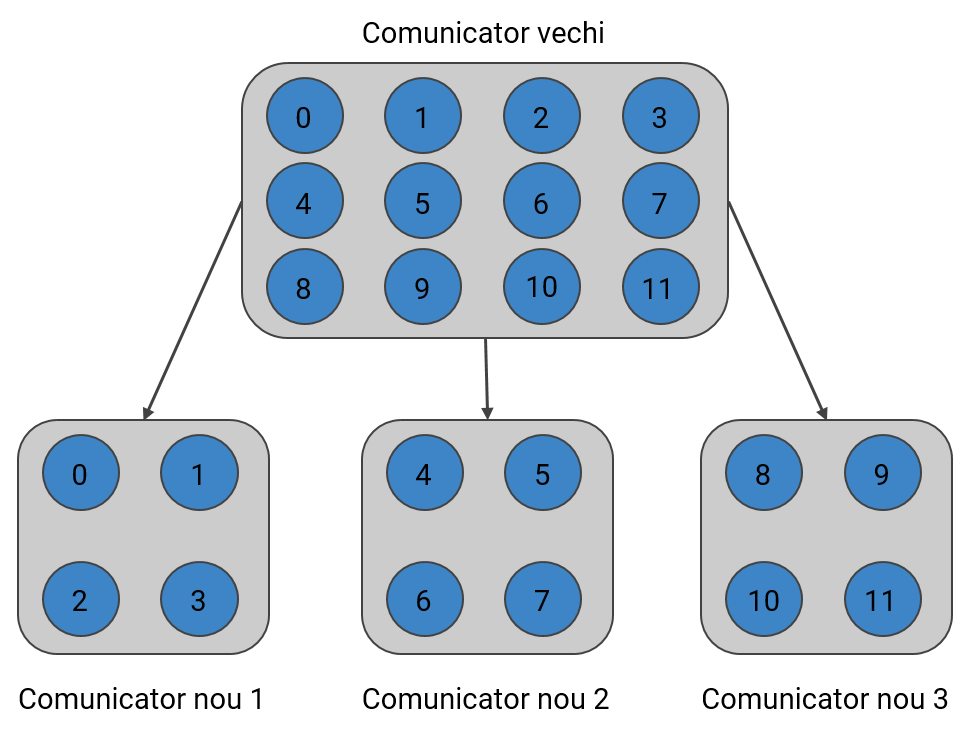

We can create communicators from another communicator using the MPI_Comm_split function, which divides a communicator into multiple smaller communicators. The function's signature is as follows:

int MPI_Comm_split(MPI_Comm comm, int color, int key, MPI_Comm * newcomm)

Where:

- comm - the communicator being split

- color - the identifier of the new communicator to which the calling process will belong; all processes that pass the same color end up in the same new communicator (usually rank_old_process / size_new_communicator)

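- key - a value that determines the rank of the process within the new communicator: processes are ranked in ascending order of key, and ties are broken by their rank in the old communicator (usually rank_old_process % size_new_communicator)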

- newcomm - the newly formed communicator

Below is an illustration of how MPI_Comm_split works: