Receive

MPI_Recv

MPI_Recv is the function a process uses to receive data from another process. Its signature is as follows:

int MPI_Recv(void* data, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm communicator, MPI_Status* status)

Where:

- data (↑) - the buffer in which the destination process stores the data received from the source process

- count (↓) - the maximum number of elements of type datatype that the buffer can hold; the received message may contain fewer elements

- datatype (↓) - the data type of the received elements

- source (↓) - the rank / identifier of the source process that sends the data

- tag (↓) - message identifier

- communicator (↓) - the communicator within which data is sent between the two processes

- status (↑) - a pointer to an MPI_Status structure that is filled with information about the received message (source, message tag, message size). If you don't need this information, you can pass MPI_STATUS_IGNORE instead.

When a process P calls MPI_Recv(), it blocks until a message matching the given source, tag, and communicator has arrived and been copied into the buffer. If no such message is ever sent, P remains blocked. The message may contain fewer than count elements, in which case the call still completes; a message with more than count elements causes a truncation error (MPI_ERR_TRUNCATE).

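The MPI_Status structure includes the following fields:

- int MPI_SOURCE - the identifier of the source process that sent the data

- int MPI_TAG - the message tag of the received message

- int MPI_ERROR - the error code associated with the received message

The size of the received data is not exposed as a public field; it is obtained by calling MPI_Get_count(&status, datatype, &count).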

MPI_Recv is a blocking function: the call does not return until the source process has sent a matching message and that message has been received.

An example program where one process sends a message to another process:

#include "mpi.h"

#include <stdio.h>

#include <stdlib.h>

int main (int argc, char *argv[])

{

int numtasks, rank, len;

char hostname[MPI_MAX_PROCESSOR_NAME];

MPI_Init(&argc, &argv);

MPI_Comm_size(MPI_COMM_WORLD, &numtasks); // Total number of processes.

MPI_Comm_rank(MPI_COMM_WORLD,&rank); // The current process ID / Rank.

MPI_Get_processor_name(hostname, &len);

srand(42 + rank); // Seed with the rank so each process starts with a different number.

int random_num = rand();

printf("Before send: process with rank %d has the number %d.\n", rank,

random_num);

if (rank == 0) {

MPI_Send(&random_num, 1, MPI_INT, 1, 0, MPI_COMM_WORLD);

} else if (rank == 1) { // Only rank 1 receives; other ranks would block forever.

MPI_Status status;

MPI_Recv(&random_num, 1, MPI_INT, 0, 0, MPI_COMM_WORLD, &status);

printf("Process with rank %d, received %d with tag %d.\n",

rank, random_num, status.MPI_TAG);

}

printf("After send: process with rank %d has the number %d.\n", rank,

random_num);

MPI_Finalize();

}

When a process X sends a message to a process Y, the tag T passed to MPI_Send by process X must be the same as the tag passed to MPI_Recv by process Y, because process Y waits for a message with tag T. If the tags differ, the receive never matches the message and process Y blocks forever, so the program deadlocks. The exception is a receive posted with MPI_ANY_TAG, which accepts a message with any tag.

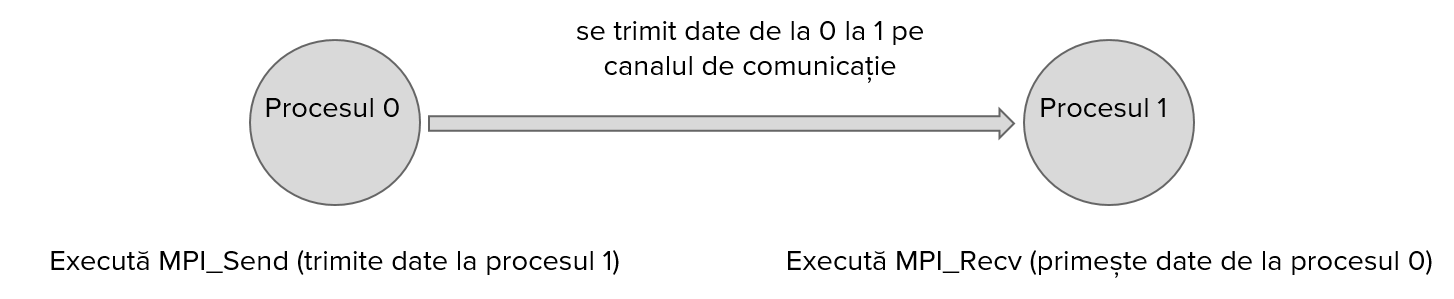

An illustration of how MPI_Send and MPI_Recv functions work together:

Below is an example in which one process sends an entire array of 100 elements to another process:


#include "mpi.h"

#include <stdio.h>

#include <stdlib.h>

int main (int argc, char *argv[])

{

int numtasks, rank, len;

int size = 100;

char hostname[MPI_MAX_PROCESSOR_NAME];

int arr[size];

MPI_Init(&argc, &argv);

MPI_Comm_size(MPI_COMM_WORLD, &numtasks);

MPI_Comm_rank(MPI_COMM_WORLD,&rank);

MPI_Get_processor_name(hostname, &len);

srand(42);

if (rank == 0) {

for (int i = 0; i < size; i++) {

arr[i] = i;

}

printf("Process with rank [%d] has the following array:\n", rank);

for (int i = 0; i < size; i++) {

printf("%d ", arr[i]);

}

printf("\n");

MPI_Send(arr, size, MPI_INT, 1, 1, MPI_COMM_WORLD);

printf("Process with rank [%d] sent the array.\n", rank);

} else if (rank == 1) { // Only rank 1 receives; other ranks would block forever.

MPI_Status status;

MPI_Recv(arr, size, MPI_INT, 0, 1, MPI_COMM_WORLD, &status);

printf("Process with rank [%d], received array with tag %d.\n",

rank, status.MPI_TAG);

printf("Process with rank [%d] has the following array:\n", rank);

for (int i = 0; i < size; i++) {

printf("%d ", arr[i]);

}

printf("\n");

}

MPI_Finalize();

}